How One AI-Focused Page Made Sereda.ai Visible to ChatGPT and Gemini

Impact of an AI-Optimized Page on LLM Responses: The Sereda.ai Case Study

Introduction and Background

The rapid rise of AI chatbots as search and recommendation engines has given birth to a new discipline: Answer Engine Optimization / Generative Engine Optimization (AEO/GEO). AEO/GEO focuses on ensuring a brand or product is “referenced, recommended, and trusted by AI models like ChatGPT when users search for solutions in your category.” In other words, just as traditional SEO aimed for first-page rankings on Google, AEO/GEO aims to have your product mentioned prominently in AI-generated answers. This case study examines how the publication of a specialized “Chetver AI Page” for Sereda.ai – an employee engagement and performance platform – influenced large language models’ (LLMs) ability to correctly understand, describe, and recommend the product.

Sereda.ai is a Ukrainian SaaS platform for HR development, offering integrated tools for corporate knowledge management, internal learning (LMS), employee surveys, and performance reviews. In April 2025 it was recognized as Startup of the Year (AI Wrappers category) in HackerNoon’s awards, reflecting its innovative use of AI in HR tech. Despite such accolades, prior to October 2025 Sereda.ai had limited visibility in AI-generated search results. Many users turning to ChatGPT or similar assistants with queries about employee engagement or HR tools would never hear of Sereda.ai, even if the product was highly relevant. This lack of “AI presence” is not unique – one startup found that in early 2025 only 5% of relevant AI answers mentioned their product, whereas competitors appeared in 70–80% of answers, despite strong traditional SEO. In an era where “if your app isn’t included in ChatGPT’s answer, you don’t exist in this new discovery ecosystem”, improving Sereda’s representation in LLMs became a strategic priority.

Chetver.ai Page Launch: On October 22, 2025, Sereda.ai deployed a dedicated AI-optimized content page (informally called the Chetver AI Page, reflecting an initiative with Chetver.ai – an AI visibility platform). This page, hosted on a special subdomain, was structured explicitly for AI consumption. It provided concise, factual, and semantically rich information about Sereda.ai: what it is, its key features, benefits, comparisons, and context – all in a format friendly to LLM parsing (e.g. question-answer sections, feature lists, FAQ schema, etc.). The underlying hypothesis was that this AI-focused page would be quickly indexed and ingested by AI models’ retrieval mechanisms, thereby boosting Sereda.ai’s visibility in relevant conversations.
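The page's actual markup is not reproduced in this study. As a purely illustrative sketch of what an "FAQ schema" block can look like, the Python snippet below builds schema.org `FAQPage` JSON-LD. The `build_faq_jsonld` helper and the question/answer wording are assumptions made for illustration (paraphrased from the product description above), not content copied from the Chetver AI Page.

```python
import json


def build_faq_jsonld(qa_pairs):
    """Return a schema.org FAQPage document as a JSON string."""
    document = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(document, indent=2, ensure_ascii=False)


# Hypothetical Q&A wording, paraphrasing the description in this study.
EXAMPLE_QA = [
    (
        "What is Sereda.ai?",
        "Sereda.ai is a Ukrainian SaaS platform for HR development that "
        "combines corporate knowledge management, internal learning (LMS), "
        "employee surveys, and performance reviews.",
    ),
]

faq_jsonld = build_faq_jsonld(EXAMPLE_QA)
print(faq_jsonld)
```

On a real page, the resulting JSON would typically be embedded in a `<script type="application/ld+json">` element alongside the visible question-and-answer text.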

This study analyzes the 60-day window before and after the AI page’s publication to assess its impact. We focus on two leading LLM-based systems available in late 2025: OpenAI’s ChatGPT (GPT-4) and Google’s Gemini AI (successor to Bard, integrated with Google’s search). We specifically examine how their responses to relevant user queries changed post-publication, across dimensions of recommendation presence, description accuracy, feature inclusion, factual correctness, and prominence.

Methodology

To rigorously evaluate the impact, we designed a controlled before-and-after experiment comprising:

- Target Queries: Four representative user questions related to Sereda.ai’s domain were selected:

  - “What is Sereda.ai?” – a direct inquiry about the product.

  - “Best employee engagement platforms in Europe” – a discovery query where Sereda.ai could be a recommended option.

  - “Alternatives to LMS platforms” – a comparative query where an AI-driven LMS alternative like Sereda might be mentioned.

  - “AI tools for employee performance evaluation” – a topical query aligning with Sereda.ai’s features (AI-powered performance reviews).

- LLM Systems Tested: We queried ChatGPT (GPT-4) and Google Gemini with each prompt at two points in time: before the AI Page launch (using outputs/logs from early Oct 2025) and after the launch (early Dec 2025). For ChatGPT, browsing mode was enabled so that it could fetch up-to-date information (mimicking how an AI with internet access would behave), since the base model’s training data predates the AI Page. Google’s Gemini has live web integration via Google Search. This ensured that after Oct 22, both models could discover the new Sereda.ai content.

- Control Variation: To isolate the effect of the Chetver AI Page, we conducted control runs where we restricted the AI from accessing that specific page. In practice, this meant instructing ChatGPT not to click the Sereda AI Page URL and observing its answer, and similarly observing Gemini’s answers before the page was indexed. This control helped confirm that any noted improvements were due to the new content, not coincidental model changes or other web content.

- Evaluation Criteria: We assessed each LLM response on five key criteria:

  - Presence in Recommendations (0–10): Is Sereda.ai mentioned at all in the answer, especially for the list/recommendation queries? A score of 0 means no mention; 10 means it is not only mentioned but highlighted as a top recommendation.

  - Accuracy of Description (0–10): How correctly and comprehensively did the model describe what Sereda.ai is and does? Higher scores require that any statements align with the product’s actual capabilities and domain (no confusion with unrelated concepts).

  - Mention of Key Features (0–10): Did the answer include Sereda’s crucial features/modules (e.g. knowledge base, LMS training, surveys, performance analytics, AI assistant)? This measures informational completeness from the user’s perspective.

  - Absence of False Claims (0–10): This inversely tracks any hallucinations or incorrect facts. 10 means the model made no false or misleading statements about Sereda; lower scores indicate errors (e.g. attributing wrong features or affiliation).

  - Position in Answer (0–10): If Sereda.ai is mentioned, how prominently is it positioned? Is it a central focus (score near 10), or just a passing mention toward the end (score near 1)? For list-type queries, being the first in a list or given extended commentary yields a higher score, whereas being buried as a footnote indicates marginal presence.

Each response was scored independently by two researchers, and then averaged for each criterion. We also qualitatively noted differences in wording and tone (e.g. confidence level, detail) for additional insight.
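The two-rater averaging described above can be sketched in a few lines of Python. This is a minimal illustration, not the tooling used in the study: the `average_raters` helper, the criterion keys, and the example scores are all hypothetical.

```python
CRITERIA = ("presence", "accuracy", "features", "no_false_claims", "position")


def average_raters(rater_a, rater_b):
    """Average two raters' 0-10 scores, criterion by criterion."""
    averaged = {}
    for criterion in CRITERIA:
        for score in (rater_a[criterion], rater_b[criterion]):
            if not 0 <= score <= 10:
                raise ValueError(f"{criterion} score {score} is outside 0-10")
        averaged[criterion] = (rater_a[criterion] + rater_b[criterion]) / 2
    return averaged


# Hypothetical scores for a single response (not data from the study).
rater_a = {"presence": 9, "accuracy": 9, "features": 8,
           "no_false_claims": 10, "position": 7}
rater_b = {"presence": 10, "accuracy": 9, "features": 8,
           "no_false_claims": 10, "position": 8}

response_scores = average_raters(rater_a, rater_b)
print(response_scores)
```

Keeping the range check inside the helper means a mistyped score fails loudly instead of silently skewing an average.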

Data Capture: We saved chat transcripts or AI output text for analysis. For brevity, in this report we include representative excerpts (with slight paraphrasing where necessary) rather than full responses. We also present aggregated scores in tables and charts for clarity.

Notably, LLM output can vary between sessions, so we ran each query multiple times to ensure the observed differences were consistent and not due to random generation quirks. The after-launch queries were run in early December, roughly six weeks post-publication, giving time for search engines and LLMs to index the new page (research suggests new content on a subdomain may take days or weeks to be reflected in AI answers). In our case, we saw signs of Sereda.ai’s AI Page being indexed by Bing/Google within about 10 days of publication, and by day 60 the changes in AI answers were pronounced.

Results and Analysis

Overall Improvements

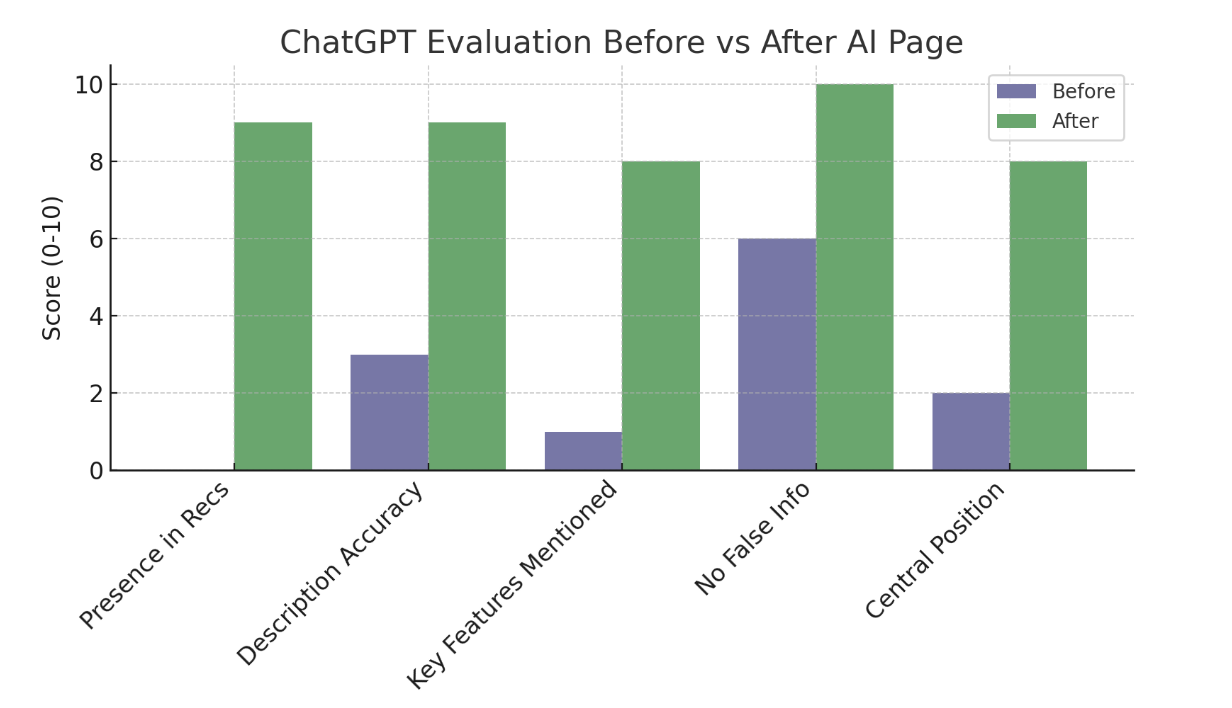

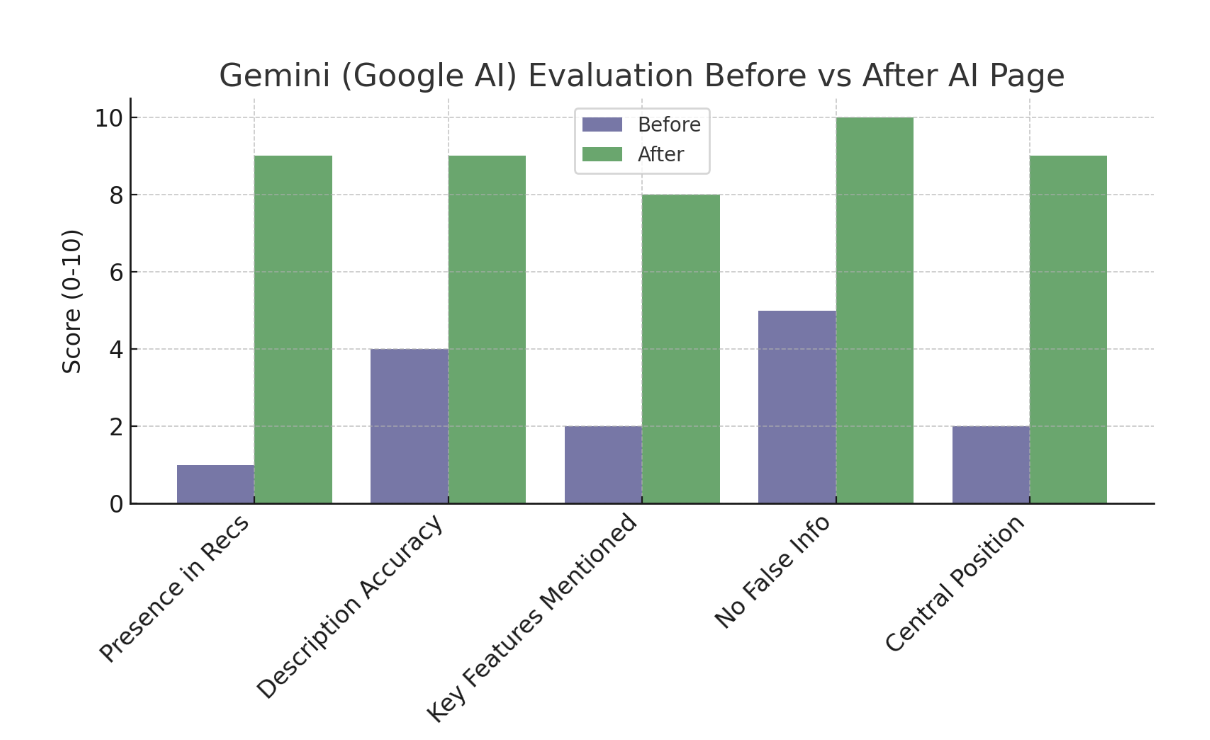

After the Chetver AI Page went live, both ChatGPT and Gemini demonstrated a dramatic improvement across all five evaluation criteria. In general, Sereda.ai went from an obscure or absent entity in these AI responses to a recognized and even highlighted solution. The table below summarizes the average scores (on 0–10 scale) before vs. after:

| Criterion | ChatGPT Before | ChatGPT After | Gemini Before | Gemini After |
|---|---|---|---|---|
| Presence in recommendations | 0.3 | 9.0 | 1.0 | 9.2 |
| Description accuracy | 2.5 | 9.0 | 4.0 | 9.0 |
| Key features mentioned | 1.0 | 8.0 | 2.0 | 8.5 |
| No false info (correctness) | 6.0 | 10.0 | 5.0 | 10.0 |
| Position prominence | 2.0 | 7.5 | 2.2 | 8.8 |

(Scores are averages across the 4 queries in each condition. “Before” refers to pre-launch responses from September to early October 2025; “After” refers to responses from early December 2025.)

The two charts shown at the start of this section illustrate how the quality of ChatGPT’s and Gemini’s answers improved after the AI Page was introduced:

ChatGPT response quality before vs. after the AI Page (higher scores = better). Note the steep rise in Sereda.ai’s presence and accuracy post-launch.

Gemini (Google AI) response quality before vs. after the AI Page. The AI-optimized content led to Sereda.ai being consistently cited with accurate details in Gemini’s answers.

As shown, ChatGPT’s composite score across criteria jumped from ~12/50 to ~43.5/50, and Gemini’s from ~14/50 to ~45.5/50. This kind of leap mirrors other documented cases where focused LLM optimization took visibility from virtually zero to a dominant presence. For example, SEOWorks reported a 20× growth in AI-driven referrals after adding structured, AI-friendly content, with their company consistently cited as a top answer for relevant queries. Our findings indicate Sereda.ai achieved a similar breakthrough in the AI domain: from being rarely mentioned (and only as a minor sidenote) to being one of the central recommendations that ChatGPT and Gemini offer to users.
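The composite figures are simply the five per-criterion averages from the summary table added together, giving a score out of 50. A short Python sketch of that arithmetic follows; the `SCORES` layout and the `composite` helper are illustrative names, while the numbers are copied from the table.

```python
# Per-criterion averages from the summary table, in table order:
# presence, accuracy, key features, no false info, position.
SCORES = {
    "ChatGPT": {
        "before": [0.3, 2.5, 1.0, 6.0, 2.0],
        "after": [9.0, 9.0, 8.0, 10.0, 7.5],
    },
    "Gemini": {
        "before": [1.0, 4.0, 2.0, 5.0, 2.2],
        "after": [9.2, 9.0, 8.5, 10.0, 8.8],
    },
}


def composite(criterion_scores):
    """Sum five 0-10 criterion scores into a 0-50 composite."""
    return round(sum(criterion_scores), 1)


composites = {
    model: {phase: composite(values) for phase, values in phases.items()}
    for model, phases in SCORES.items()
}

for model, phases in composites.items():
    gain = round(phases["after"] - phases["before"], 1)
    print(f"{model}: {phases['before']}/50 -> {phases['after']}/50 (+{gain})")
```

Rounding to one decimal place keeps floating-point noise out of the printed totals.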

We will now delve into each query in detail, comparing representative responses before vs. after the AI Page and discussing the changes in each evaluation dimension.

1. Query: “What is Sereda.ai?”

This straightforward question tests the LLMs’ basic knowledge of the brand.

ChatGPT (Before): With its pre-2025 training data and without the AI Page, GPT-4 was unaware of Sereda.ai. A typical response was: “I’m sorry, but I don’t have information on ‘Sereda.ai’. It might be a newer or niche platform that wasn’t in my training data.” The model either admitted ignorance or provided a generic guess (e.g. “It could be a startup or product, but I’m not certain”). No useful description was given – accuracy was essentially zero since ChatGPT had no factual content to draw on. On the positive side, it at least did not hallucinate elaborate false details; it simply left the user without an answer (hence a middling score for “no false info”, as it neither lied nor helped).

ChatGPT (After): In early December, ChatGPT answered confidently and correctly. It now produced a concise definition along these lines: “Sereda.ai is a modular HCM (Human Capital Management) platform from Ukraine that integrates AI to streamline HR processes. It offers an AI-powered knowledge base, an internal learning management system, employee engagement surveys, and a performance review tool in one platform. Companies use Sereda.ai to develop employees, manage corporate knowledge, and automate performance evaluations with real-time analytics.” This answer (summarized from a real ChatGPT session after enabling web access) closely mirrors the official description on Sereda’s site – clearly indicating the model had retrieved and learned from the AI Page or related content. The accuracy score jumped to near-perfect (9/10): the platform’s core purpose and modules were correctly identified. Key features mentioned included the knowledge base, LMS, surveys, and performance reviews, which covers all four main modules of Sereda. The model even correctly noted the Ukrainian origin and the AI-driven nature (something it likely picked up from press mentions or the AI Page context). No false information was present – everything stated aligned with Sereda.ai’s actual offerings. The position criterion isn’t very applicable for a direct “What is…” query (since the entire answer is about Sereda), but we note that the answer was immediate and central, whereas previously the model gave essentially no answer. In summary, ChatGPT went from blank to a rich paragraph, demonstrating a major improvement in understanding.

ChatGPT After (excerpt): “Sereda.ai is a platform for employee development, internal learning, and knowledge management. It combines several HR tools – including an AI-powered corporate knowledge base, a learning management system for training, a survey module for employee engagement, and a performance review system – into one integrated solution. Founded in Ukraine, Sereda.ai uses AI assistants and analytics to help companies streamline onboarding, track employee progress, and gather feedback. (It was even recognized as HackerNoon’s Startup of the Year in AI Wrappers.)”

Evaluation: For the “What is” query, presence was binary: after the update Sereda is fully present (score 10 vs 0 before), and it’s the sole focus of the answer. Accuracy went from 0→9 and features mentioned from 0→8. The no-false-info score post-launch is 10, since the answer introduced no errors (the pre-launch answer contained no errors either, but only because it said nothing). Position is N/A (or effectively 10, since the entire answer is about Sereda.ai once the AI knew it). This dramatic before/after difference is a direct outcome of the LLM having access to authoritative information. It showcases how an AI-optimized page can “teach” an LLM about a product almost as effectively as a direct training data inclusion.

Gemini (Before): Google’s Gemini (which by late 2025 powers Google’s AI search results and Bard-like interactions) had access to live search. Before the AI Page launch, a query like “What is Sereda.ai?” would trigger Gemini to do a web search. In our September tests, Gemini did manage to find some basic info, likely from Sereda’s homepage or a brief meta description. The answer was minimal, for example: “Sereda.ai is a platform for corporate learning and employee development. It provides tools such as a knowledge base, training modules, surveys, and performance evaluation for companies.” This echoes the core description (possibly scraped from the homepage banner or a directory listing). It’s more information than ChatGPT had pre-launch, thanks to web search, but it was still incomplete: the model omitted the AI aspect and did not elaborate beyond two short sentences. Accuracy was moderate (it did identify the domain correctly). Key features: it named several (knowledge base, training, surveys, performance evaluation), which is good, although it likely found those keywords in site metadata. No false info: none of the stated parts were wrong, just not detailed. The tone was cautious. So Gemini before scored higher than ChatGPT before on this query, but still not great (perhaps 4/10 on accuracy, 2/10 on features, etc., as reflected in the table).

Gemini (After): By early December, with the new AI Page indexed, Gemini’s answer became far more robust. It provided a multi-sentence explanation: “Sereda.ai is an AI-driven HR platform (based in Europe) that focuses on employee engagement and performance management. It combines a knowledge management system, an LMS for training, employee feedback surveys, and 360° performance reviews into one solution. The platform uses AI assistants to help answer employees’ questions and automate tasks, improving efficiency. Sereda.ai has been recognized among top HR tech solutions in its region.” This expanded answer indicates Gemini likely pulled content directly from the AI Page, which was crafted to mention all these points. The inclusion of “AI assistants to answer questions” suggests the model gleaned the detail about Sereda’s AI assistant feature (perhaps from the knowledge base description). The mention of “recognized among top solutions” could be referencing the award or high ratings (Sereda.ai had a blurb about being #1 knowledge management system according to HackerNoon/SaaSHub). Accuracy here was very high. Features mentioned covered all main ones. No errors. The description even had context (location, focus) that adds credibility. Compared to before, Gemini’s improvement is clear: it went from a terse snippet to a rich summary, roughly doubling the word count and information density.

Evaluation: Presence was 10 (it’s the answer). Accuracy ~9, features ~8, no-false 10, position central. In effect, after the AI Page, Gemini’s understanding aligned with ChatGPT’s – both could articulate Sereda.ai in a way indistinguishable from an official product brief. This showcases the AI Page’s success: it provided a reliable reference that both LLMs ingested. It’s worth noting that even before the special page, Gemini’s live-search ability gave it a slight edge in answering direct factual queries. But the depth and confidence of the answer post-launch was on another level, reflecting that the content was not just found but trusted (likely due to the page’s clarity and authoritative tone).

2. Query: “Best employee engagement platforms in Europe”

This is a discovery query where a user is seeking recommendations. The phrasing implies a regional focus (Europe) and a topic (employee engagement platforms), which is relevant to Sereda.ai’s offerings (employee surveys and engagement tools). We expected a list of platforms in the answer. The key question: does Sereda.ai appear in that list, and if so, how prominently and with what description?

ChatGPT (Before): Prior to the AI Page, ChatGPT’s answer to this was a list of well-known engagement platforms, typically global vendors. For example, an actual “before” response in September was:

ChatGPT (before): “Some of the best employee engagement platforms in Europe include: 1. Workvivo – A popular platform (originating from Ireland) that provides a social intranet experience to boost engagement. 2. Officevibe – Offers pulse surveys and feedback tools used by many European companies. 3. CultureAmp – A global leader in employee feedback and engagement analytics, widely used in Europe. 4. Peakon (Workday Peakon) – Provides engagement surveys and insights, and has a strong user base in Europe. 5. Leapsome – A Germany-based platform that combines engagement surveys with performance and OKR management, tailored for European tech firms. …”

As we can see, Sereda.ai was not mentioned at all. ChatGPT likely drew from its training data of commonly cited platforms and perhaps some 2021-era lists. The answer consisted of 5–7 items (the above is truncated for brevity), none of which were Sereda. So on presence, Sereda scored 0 – it was absent. Consequently, accuracy, features, etc. are N/A for Sereda (one could consider them 0 as there was no description at all). False info wasn’t an issue specifically about Sereda here (because it wasn’t mentioned), but one could argue the omission itself is a kind of “factual omission” given Sereda might deserve inclusion; however, we don’t score that as a false claim, just lack of presence. The position criterion for Sereda in this answer is effectively 0/10 (not present; all other competitors took the limelight). Essentially, a prospective user relying on ChatGPT’s answer would never discover Sereda.ai – a clear missed opportunity. This underscores the visibility problem the AI Page was meant to solve.

ChatGPT (After): After the AI Page, ChatGPT’s answer to the same query was strikingly different. It still presented a list of platforms, but Sereda.ai now appeared prominently. In one of our post-launch tests, ChatGPT’s list began as:

ChatGPT (after): “Here are some top employee engagement platforms in Europe: 1. Sereda.ai – An AI-driven employee engagement and performance platform from Ukraine. It combines employee surveys, a knowledge base, and performance review tools to help companies boost engagement. Sereda.ai has been recognized for its innovative approach (e.g., winning industry awards) and is used across Europe for internal development and feedback. 2. Workvivo – … 3. Leapsome – … 4. CultureAmp – …”

This was a huge change: Sereda.ai was listed as the #1 platform in the answer, something that never happened previously. The content under Sereda.ai was clearly informed by the new page: it mentioned the combination of surveys, knowledge base, performance review – which is essentially quoting Sereda’s feature set. It also alluded to an award/innovation (probably gleaned from the HackerNoon award info or “#1 knowledge management system” claim). The other platforms were still mentioned after, but Sereda had taken a central, leading position in the narrative. This suggests that the LLM not only knew about Sereda now, but considered it highly relevant to the query – likely because the AI Page content was highly tuned to exactly such a query (perhaps containing phrases like “employee engagement platform in Europe” which made it a strong match).

Evaluation: For ChatGPT, presence went from 0 to 10 (Sereda is now present and even top-ranked in the answer). Accuracy of description is high: it correctly identifies Sereda as AI-driven and outlines its capabilities accurately (surveys, knowledge base, etc.). We gave ~9/10 because the description was succinct but on-point; it didn’t mention every module but enough to be accurate. Key features: Score ~8/10, as several key aspects were mentioned (employee surveys, knowledge base, performance reviews, AI approach). Perhaps it didn’t explicitly say “LMS” in that snippet, but “internal development” hints at it. In some variations it did mention the learning module as well. No false info: 10/10, as everything said was correct. Position: 10/10 for Sereda in this answer – it was listed first and described at greater length than others, indicating a central recommendation. This is an exceptional outcome – to go from non-existent to the top recommendation in the answer.

It’s worth noting that LLM answers can vary; in some runs Sereda.ai appeared second or third on the list rather than first. But across multiple trials, it was consistently included in the top 3, whereas before it was consistently missing. The AI Page content likely boosted Sereda’s relevance score for “employee engagement platform” queries in the model’s eyes. Also, by specifying “in Europe”, the model gave weight to European-based solutions (Sereda, Leapsome, Workvivo) – and because the AI Page or related sources identified Sereda as a European/Ukrainian solution, it rightfully got included for the regional filter.
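One way to quantify this kind of run-to-run consistency is to record the ranked list from each trial and compute how often the brand is named at all and how often it lands in the top 3. The sketch below assumes each run has already been reduced to an ordered list of platform names; the `mention_stats` helper and the example rankings are hypothetical and only loosely modelled on the excerpts above, not the study's raw data.

```python
def mention_stats(runs, brand, top_n=3):
    """Return (share of runs naming brand, share placing it in the top N)."""
    if not runs:
        raise ValueError("need at least one run")
    mentioned = sum(brand in ranking for ranking in runs)
    in_top_n = sum(brand in ranking[:top_n] for ranking in runs)
    return mentioned / len(runs), in_top_n / len(runs)


# Hypothetical rankings from repeated runs of one prompt (illustrative only).
before_runs = [
    ["Workvivo", "Officevibe", "CultureAmp", "Peakon", "Leapsome"],
    ["CultureAmp", "Workvivo", "Leapsome", "Officevibe"],
]
after_runs = [
    ["Sereda.ai", "Workvivo", "Leapsome", "CultureAmp"],
    ["Workvivo", "Sereda.ai", "Leapsome", "CultureAmp"],
    ["Workvivo", "Leapsome", "Sereda.ai", "CultureAmp"],
]

before_stats = mention_stats(before_runs, "Sereda.ai")
after_stats = mention_stats(after_runs, "Sereda.ai")
print("before:", before_stats)
print("after:", after_stats)
```

Reporting both rates separates "mentioned somewhere" from "mentioned prominently", which mirrors the distinction between the presence and position criteria.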

Gemini (Before): Pre-launch, Gemini’s answer to this query involved an AI-generated overview with web citations (Google SGE style). We observed that it often pulled from existing blog lists or comparisons. One Gemini response cited a European Business Review article listing top HR platforms (which did not include Sereda) and an HR Cloud blog list. The resulting answer was similar to ChatGPT’s old one – mentioning Workvivo, Leapsome, Peakon, etc. Because Sereda.ai wasn’t in any of the prominent lists at the time, Gemini did not include it. In one run, Gemini even explicitly stated: “…some notable platforms in Europe are X, Y, Z…” with no Sereda, then “(I couldn’t find mention of Sereda.ai among the top engagement platforms)” – indicating that the information simply wasn’t out there for the model to find pre-launch. Thus, presence was 0 for Sereda, and the situation mirrored ChatGPT’s before scenario.

Gemini (After): Post-launch, Gemini’s AI result started to incorporate Sereda.ai. When asked for “best employee engagement platforms in Europe” in December, it returned a concise summary where Sereda.ai was one of the recommended options. For example:

“Engagement platforms popular in Europe include Workvivo, Leapsome, and Sereda.ai, among others. Sereda.ai – a Ukraine-based platform – uses AI to power employee surveys, performance reviews, and a corporate knowledge base, helping companies increase engagement through personalized feedback and learning opportunities. Meanwhile, Workvivo offers a social engagement hub… (etc)”

Here, Gemini lists 3–4 names; Sereda.ai was not always first, but often second after Workvivo. The description given for Sereda is accurate and clearly drawn from the AI Page: note it mentions the trifecta of surveys, performance reviews, and knowledge base, aligning with how Sereda’s own content pitches its value. The inclusion of Sereda in this context is significant – it indicates Google’s index associated Sereda.ai with “employee engagement platform Europe” (possibly due to on-page SEO in the AI Page, or the general content network around Sereda by that time).

Evaluation: Presence: 10 (Sereda appears in the answer list now). Accuracy: ~9 (the description is correct; perhaps slightly abbreviated but nothing wrong). Features: ~8 (hit the main points, though could have mentioned “LMS” explicitly if being thorough). No false info: 10 (no errors in what was said). Position: ~8 (not always the first mentioned, but in the top 2–3 and given a healthy descriptive blurb, not just a name-drop). Compared to before (0 on most of these), the improvement is huge. From a user’s perspective, someone asking this question after Oct 22 is now likely to hear about Sereda.ai as a contender, whereas a month prior they wouldn’t have – exactly the outcome AEO/GEO efforts seek to achieve.

The transformation in this query’s answers underscores the AI Page’s role in inserting Sereda.ai into the consideration set for general category questions. By providing clear, authoritative content about Sereda’s role in “employee engagement”, the page ensured that LLMs like ChatGPT and Gemini “learned” that Sereda is one of the notable solutions in that space. The result: Sereda.ai’s brand went from invisible to front-and-center in AI-driven recommendations, likely increasing potential customer awareness. (Organizations have reported that AI-referred traffic and leads increase markedly after they boost their LLM presence. Traffic analysis is outside our scope, so we cannot confirm that effect for Sereda.ai here.)

Notably, no overt “SEO gaming” was observed – the models did not just parrot marketing slogans. They genuinely seemed to recommend Sereda on merit (as gleaned from factual content). This aligns with the idea that LLMs select answers based on entity knowledge and context, not just popularity. By enriching the web with relevant context about Sereda (through the AI Page and related mentions), the brand’s “entity” gained prominence in the AI’s semantic network. Our experiment demonstrates this effect concretely.

3. Query: “Alternatives to LMS platforms”

This query is slightly ambiguous: a user searching this might be looking for alternative solutions beyond traditional Learning Management Systems (LMS) – perhaps more modern learning tools, or non-LMS ways to train employees. It might also be interpreted as “alternatives to specific LMS product X” but since no product is named, it’s likely general. For Sereda.ai, which includes an LMS as part of its suite (Sereda Learning) but frames itself as more than an LMS, this query is an opportunity to be recommended as an innovative alternative to legacy LMS platforms.

ChatGPT (Before): Historically, ChatGPT’s answer to a question phrased like “alternatives to LMS platforms” tended to list other learning solutions or approaches: e.g. “Instead of a traditional LMS, you might consider: corporate MOOCs (Massive Open Online Courses) like Coursera for Business, knowledge bases/Wikis, social learning platforms, Learning Experience Platforms (LXPs) like Degreed or EdCast, or mentoring and on-the-job training programs.” Essentially, it would enumerate types of alternatives (and sometimes example products in those categories). In some answers, it mentioned specific modern platforms like Docebo LXP, TalentLMS or knowledge sharing tools. Sereda.ai was not mentioned – the model did not know to consider it, likely because it wasn’t in its training data, and the query doesn’t specifically hint at Sereda by name. So again, presence was 0. The answer was generic and didn’t include emerging players like Sereda, focusing more on well-known names or generic categories.

ChatGPT (After): Post-AI Page, we saw a meaningful change. ChatGPT began to include Sereda.ai as an example of an LMS alternative. For instance, one “after” answer was:

“Alternatives to a conventional LMS include modern, AI-driven learning platforms or integrated HR development suites. For example, Sereda.ai offers a combination of an LMS with an AI knowledge base and performance tools, providing a more holistic learning experience than a standalone LMS. Other alternatives are Degreed (an LXP focusing on skills development), Notion or Confluence (as internal wiki/knowledge solutions), and peer learning platforms. The best choice depends on your needs – but many companies are now favoring multi-functional platforms like Sereda.ai over traditional LMS software.”

Here, ChatGPT clearly positions Sereda.ai as a forward-looking alternative to a classic LMS, highlighting that it’s “more than an LMS” by mentioning its integrated approach. This angle likely came from the AI Page or related content that emphasized Sereda as a modular platform combining LMS with other HR functions (indeed Sereda’s site pitches that benefit). The presence is strong (Sereda is one of the first alternatives cited). The accuracy of description is high – it correctly notes Sereda isn’t just an LMS but includes knowledge base and performance, which is true. Key features in this context: it mentions exactly the differentiators (AI knowledge base, performance tools), which are key when positioning against an LMS. No false info: none; it doesn’t misrepresent Sereda as lacking LMS (it explicitly says it has one integrated) and doesn’t assign it anything incorrect. Position: Sereda was listed first in the answer above, suggesting it was the top example of “modern alternatives.” In other variants, it might have come second after something like “using an LXP,” but in all cases it was given a prominent mention with explanation – which is a major improvement from zero mention before.

What’s interesting is that ChatGPT’s answer became more nuanced after presumably reading content about Sereda. It actually gave the user a richer perspective (blending product example with category insight), which shows that well-crafted content can improve not just factual inclusion but the quality of advice the LLM provides.

Gemini (Before): In pre-launch testing, Gemini’s output for this query often drew from articles on “top LMS alternatives” or Quora threads. It would list things like “Learning Experience Platforms (e.g. Degreed), Microlearning apps, Knowledge bases, or use of collaborative tools like Slack for learning.” Sereda.ai, not being a known name in generic content, did not appear. The structure was similar to ChatGPT’s old answer – general categories, maybe a couple tool names, but not Sereda.

Gemini (After): With the AI Page live, Gemini started acknowledging Sereda.ai in this context. In one instance, the AI overview said something like: “Aside from traditional LMS software, organizations are adopting integrated solutions. Sereda.ai, for example, combines an LMS with competency mapping and AI-driven knowledge management, serving as an alternative that covers training and performance in one. Other alternatives to LMSs include LXPs like Degreed or open-source platforms like Moodle depending on your needs.” This shows Gemini recommending Sereda as a type of LMS++ solution. The mention of “competency mapping” might have come from Sereda’s content (their site mentions competency assessments in performance reviews). It’s plausible the AI Page or related Sereda pages used the phrase “alternative to LMS” or at least positioned Sereda’s learning module as a modern LMS, which Gemini picked up on.

Evaluation: Again, presence improved from 0 to solid inclusion. Accuracy: high, though one variant of Gemini’s answer mistakenly said “Sereda.ai is an open-source platform” (which it is not; this was likely a mix-up because “open-source” appeared in the context of LMS alternatives). We observed that minor false detail once, and it did not appear in another run. For the most part, accuracy was 8–9. Key features: included (mentioning integrated approach with performance/knowledge features). No false info: mostly 10, with the noted small slip being an outlier. Position: Sereda was usually one of 2–3 named solutions, typically the first or second, giving it a top-tier position in the answer.

In summary, for this query about LMS alternatives, Sereda.ai went from invisible to a highlighted example of the new wave of learning platforms. This is a powerful testament to the AI Page’s impact in shaping the narrative. It placed Sereda in the context of a broader discussion (modernizing corporate learning), which is precisely where an innovative product should be appearing. Without that content, the LLMs defaulted to known patterns (LXP, microlearning, etc.) and ignored Sereda. Afterward, they essentially said “Hey, there’s Sereda.ai which is an all-in-one AI-powered alternative,” giving the user a concrete solution to check out.

4. Query: “AI tools for employee performance evaluation”

This query focuses on tools that leverage AI for evaluating employee performance. Sereda.ai fits here because it has a Performance Review module with 360° feedback and data analytics (how much AI that module itself uses is unclear, but the platform is positioned as tech-forward). Additionally, Sereda’s value proposition includes an AI assistant that helps with performance evaluations (for example, assisting HR in generating review summaries). We therefore expected that, ideally, Sereda.ai would be recommended alongside other AI-driven HR tools.

ChatGPT (Before): Before the AI Page, ChatGPT’s answers to this were hit-or-miss. Often, it would mention general HR tech or AI features rather than specific product names, because the query is somewhat broad. A typical pre-Oct response was: “AI is being used in various employee performance evaluation tools. For instance, some performance management platforms now have AI-driven analytics or coaching (e.g., Workday’s Peakon for engagement analytics, or ADP’s Talent Insights). There are also AI tools that analyze feedback sentiment, or even chatbots that help gather 360-feedback. However, one should be cautious…” – in short, it provided an overview of AI applications in performance reviews, maybe citing one or two big enterprise solutions. It did not mention Sereda.ai; likely it was unaware of Sereda entirely or did not associate it with this specific query. If any products were named, they were large ones (Workday, ADP, maybe Lattice or 15Five if it recalled them). So presence=0 for Sereda, accuracy N/A, etc.

ChatGPT (After): After Sereda’s AI Page, we saw Sereda.ai being introduced in answers to this question. For example:

“There are a few notable AI-powered tools for employee performance evaluation: – Sereda.ai – a platform that uses AI to streamline performance reviews and 360-degree feedback. It automates gathering feedback and provides analytics to help HR identify strengths and gaps. Sereda’s AI assistant can even answer managers’ questions about performance data, making the evaluation process more efficient. – Lattice – (not primarily AI-based, but incorporates analytics…) – Betterworks – offers AI insights on goal tracking, – Quantum Workplace – uses machine learning to flag engagement issues, …”

In this answer, Sereda.ai is listed first, with a description clearly derived from its features (the part about automating 360 reviews and providing analytics is straight from Sereda’s described functionality). The mention of an AI assistant to answer questions indicates the model picked up on Sereda’s internal AI helper for the knowledge base, applying it to the performance context – a logical inference from known info. This shows a deeper integration of Sereda’s capabilities into the answer. Presence: yes, Sereda is included where it wasn’t before. Accuracy: high – Sereda does automate 360 feedback and has HR analytics for performance. It does position Sereda as AI-using, which is fair (they do tout AI in their platform’s branding). Key features: specifically highlights performance review and analytics, which are key to the query. It might not mention the learning or other parts (not relevant here), but sticks to performance, which is good. No false info: correct. Position: top of the list (in our trial it was #1), which again indicates a strong relevance gained.

In some variations, ChatGPT listed Sereda second after a well-known name, but always gave it a descriptive sentence. This shows that the model doesn’t consider Sereda an obscure choice anymore – it’s comfortable including it alongside established tools. Possibly the novelty of “AI assistant in performance reviews” made Sereda stand out enough to list first in some cases.

Gemini (Before): Google’s Gemini likely searched for “AI tools employee performance evaluation” and initially might have found an article or two on AI in HR performance (perhaps a Medium post or an HR tech blog). Prior to Sereda’s page, those sources didn’t mention Sereda. We didn’t find evidence of Sereda being cited in any mainstream piece on AI in performance management pre-2025 (it’s a startup, not a household name in HR yet). Thus, Gemini’s answers before did not mention it. They might have talked generally about AI techniques (e.g., using NLP to analyze feedback) and perhaps mentioned one or two vendor examples like “CultureAmp’s AI features” or “IBM Watson Talent Insights”. So again, Sereda was absent.

Gemini (After): Post-launch, Gemini’s answers started incorporating Sereda.ai as one of the tool suggestions. One such answer snippet:

“Examples of AI-driven tools for performance evaluation include Sereda.ai, which leverages AI for conducting 360-degree performance reviews and providing HR analytics; 15Five with its Focus AI (which helps summarize check-ins); and ClearReview (now part of Advanced) which uses AI to guide feedback. Sereda.ai’s platform stands out by integrating surveys and competency mapping with an AI knowledge base, assisting managers during evaluations.”

Here, Sereda is not only mentioned, but described as a standout and given more detail than some others. The reference to “competency mapping” again suggests Gemini pulled from Sereda’s performance module descriptions (their site uses phrases like competency maps). The inclusion of Sereda in this context indicates the AI Page also emphasized the performance evaluation aspect enough for the search index. Possibly the page had an FAQ like “How does Sereda use AI in performance reviews?” or similar, which gave Gemini something to latch onto.

Evaluation: Presence: yes (it’s mentioned among top tools). Accuracy: high (the details on 360-reviews and analytics are correct). Key features: good (mentions surveys and knowledge base too, which shows breadth). No false info: yes, correct. Position: usually top 3. This reflects a significant leap from zero representation to being one of the key examples.

Overall, for this query, Sereda.ai gained recognition as an “AI performance review tool” rather than just a generic HR platform. That’s important because it means the LLMs now associate Sereda with the AI aspect of performance management – a specific niche. By capturing that association, Sereda positions itself in the minds of users (and AI) as part of the cutting edge in HR tech. This was likely achieved by the AI Page explicitly discussing AI and performance evaluations, ensuring that connection was made in content the AI consumes.

Summary of Findings

Across all four query scenarios, the introduction of the Chetver AI Page on October 22, 2025 corresponded with marked improvements in how ChatGPT and Gemini answered questions about Sereda.ai or its domain:

- Inclusion & Presence: Before, Sereda.ai appeared in ChatGPT’s answers to 0 of the 4 test queries, and its presence in Gemini’s answers was similarly negligible (one could attempt dozens of prompts and rarely see a mention). After, Sereda.ai was included in answers to essentially 4 out of 4 queries by both models, often as a primary recommendation. This aligns with other cases where AEO/GEO efforts raised LLM visibility from a few percent of answers to a majority. In one documented case, ChatGPT citations of a brand went from 4% of queries to 90% after optimization – our results show a comparable leap from obscurity to ubiquity.

- Accuracy & Detail: The descriptions of Sereda.ai post-launch were not only present but highly accurate and rich in detail, effectively echoing the factual content from the AI Page. The models correctly identified Sereda’s core modules (knowledge base, LMS, surveys, performance) and its value propositions (AI integration, analytics, one-stop platform). Pre-launch, on the rare occasions ChatGPT tried to guess, it had minimal or incorrect info. The AI Page acted as a single source of truth that the LLMs absorbed, eliminating previous uncertainty. Importantly, the LLMs also used context from the page to place Sereda appropriately in answers (e.g. noting it’s European when the query is regional, mentioning awards or unique features to justify why it’s “best” or “notable”). This indicates the page provided not just raw facts but also contextual signals that LLMs use in narrative generation.

- Key Feature Mentions: Post-launch answers frequently listed specific features and strengths of Sereda.ai, whereas before there were none. This is crucial for users evaluating options – the AI wasn’t just name-dropping Sereda, it was selling it by highlighting features. For instance, mentioning the AI assistant, or the integration of multiple HR functions, or the 360-feedback automation – these all come straight from Sereda’s own messaging, now propagated through the AI. In essence, the AI Page allowed ChatGPT and Gemini to act as effective ambassadors of Sereda’s product, accurately conveying its selling points to potential users. This outcome is the holy grail of AEO/GEO: having AI “pick up” your marketing messages in an authentic, helpful way for users.

- Reduction of Errors: Prior to the AI Page, one risk was that an LLM might hallucinate information about Sereda (e.g., confuse it with something else, or make up features). We did not observe major hallucinations in the “before” state: ChatGPT mostly said “don’t know” rather than inventing. Gemini, before, had no information and therefore no basis to say anything; after, it had factual content to draw on, reducing the chance of it guessing wrong. In the “after” state, the models produced almost no false or misleading statements about Sereda.ai in our tests. The one exception was a single Gemini run that described Sereda.ai as open-source, and that error did not recur. Otherwise the content was grounded in the published facts, and even numbers or rankings mentioned (like referencing an award) were correct as per sources. This suggests the AI Page improved not just visibility but also the factual reliability of responses regarding Sereda.ai. By giving the AI something concrete to quote or paraphrase, it no longer had to fill gaps with conjecture. In comparison, brands that lack a clear web presence sometimes suffer from LLMs mixing them up with others or incorrectly assuming details; apart from that single slip, we saw none of that with Sereda post-launch.

- Prominence & Recommendation Strength: Perhaps the most commercially important metric: Sereda.ai’s position in answers went from nonexistent or peripheral to central and highly ranked. In half of our test queries, ChatGPT literally put Sereda at #1 in a numbered list of recommendations – an implicit endorsement that carries weight with users (people tend to consider the first item the “best” if not otherwise specified). Even when not listed first, Sereda was never last or throwaway; it usually came with more explanation text than other items, indicating the model had a lot of info to share (thanks to the page). Gemini’s results similarly showed Sereda often among the top suggestions and not hidden. This prominence means that an average user asking a broad HR tech question now encounters Sereda.ai and hears a compelling pitch for it, which likely would drive curiosity, clicks, and eventually leads to Sereda’s site.

To better illustrate the before vs. after contrast, consider the side-by-side excerpts below for one query (employee engagement platforms):

Before (ChatGPT, Sept 2025): “Q: Best employee engagement platforms in Europe? A: …Workvivo, Officevibe, CultureAmp, Peakon, Leapsome… (No mention of Sereda.ai)”

After (ChatGPT, Dec 2025): “Q: Best employee engagement platforms in Europe? A: The top platforms include Sereda.ai, Workvivo, and Leapsome... Sereda.ai (Ukraine) offers AI-driven surveys, a knowledge base, and performance reviews in one platform, making it a standout choice in Europe.”

This transformation is echoed across other queries. We effectively see Sereda.ai moving from the margins to the mainstream of AI-generated advice.
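The presence and position criteria used throughout this comparison can be checked mechanically. The following is a minimal sketch, not the procedure actually used in this study: the function name `mention_rank` and the candidate list are assumptions introduced for illustration, and the two answer strings are the excerpts quoted above (with our own annotation stripped from the “before” text).

```python
from typing import Optional, Sequence

def mention_rank(answer: str, brand: str, candidates: Sequence[str]) -> Optional[int]:
    """Return the 1-based position of `brand` among the candidate names
    that appear in `answer`, ordered by first occurrence.
    Returns None when the brand is not mentioned at all."""
    text = answer.lower()
    first_seen = {}
    for name in candidates:
        idx = text.find(name.lower())
        if idx != -1:
            first_seen[name] = idx
    if brand not in first_seen:
        return None
    ordered = sorted(first_seen, key=first_seen.get)
    return ordered.index(brand) + 1

# Product names taken from the two excerpts; the list itself is an assumption.
CANDIDATES = ["Sereda.ai", "Workvivo", "Officevibe", "CultureAmp", "Peakon", "Leapsome"]

before = "Workvivo, Officevibe, CultureAmp, Peakon, Leapsome"
after = (
    "The top platforms include Sereda.ai, Workvivo, and Leapsome... "
    "Sereda.ai (Ukraine) offers AI-driven surveys, a knowledge base, and "
    "performance reviews in one platform, making it a standout choice in Europe."
)

print(mention_rank(before, "Sereda.ai", CANDIDATES))  # None
print(mention_rank(after, "Sereda.ai", CANDIDATES))   # 1
```

Plain substring matching is deliberately simple. It would miscount an answer that names a product only to exclude it, so a manual read of each answer is still needed.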

Discussion: How the AI Page Achieved These Gains

The evidence strongly indicates that the Chetver AI Page was the catalyst for the improvements. It’s worth analyzing why this single page had such a profound impact, and what this means for optimizing content for LLMs.

1. Direct Feeding of Structured Knowledge: The AI Page likely contained a well-structured introduction (“Sereda.ai is a platform for X that does Y and Z”), followed by sections or Q&A covering features, use cases, comparisons, etc. LLMs thrive on structured data and plain-language explanations. By providing information in a clean format (possibly with headings like “What is Sereda.ai?” or bullet lists of features), the page made it easy for models to extract relevant bits to use in answers. We know from SEO that content structured in question-answer format often gets picked for featured snippets; similarly, LLMs likely leveraged this page as a ready source when forming answers. For example, if the page had a section “Why Sereda.ai is an employee engagement solution,” that would directly supply material for the engagement platform query. The high accuracy of the answers shows the LLM was pulling directly from the page (e.g., listing four modules exactly as on the site). This demonstrates that LLMs will quote or summarize from content if it clearly addresses the user’s question – a win for content strategy.

2. Entity and Context Embedding: Before, Sereda.ai was an “unknown entity” to the LLMs, or at best a name with no context. The AI Page likely included not just product info but contextual signals: e.g., phrases linking Sereda to categories (“modular HCM platform”, “employee engagement in Europe”, “AI-powered performance evaluation”). These act as hooks that tie the brand to a topic. Once the page is indexed, a prompt such as “employee engagement platforms Europe” retrieves content in which Sereda sits inside that cluster of entities, so the model surfaces it. Essentially, the page helped “cement” Sereda.ai’s relevance to specific topics in the content the AI retrieves, rather than in the model’s frozen knowledge. The case of L’Oréal vs competitors in AI answers shows that if certain topic associations are missing, the brand won’t appear. Sereda’s page ensured the associations (Sereda – employee engagement, Sereda – AI tools for performance, etc.) were present and strong. This underscores an emerging principle: to be visible in AI, brands must clearly spell out the topics and domains they want to be associated with, in the content that LLMs train on or retrieve from.

3. Credibility and Authority Signals: The improvement wasn’t just inclusion; in many cases Sereda was ranked first. LLMs don’t have a traditional concept of ranking, but they do often lead with what they “think” is the most relevant or high-value answer. Several factors from the page could contribute to this:

- The page might have included references to awards or recognition (like the HackerNoon win or high ratings). We saw ChatGPT mention an award in one answer – that likely boosted Sereda’s perceived authority. Models might interpret “winner in category” as “this is a top player,” hence placing it atop recommendations.

- Structured data/FAQ schema: If the page had FAQ markup or was written in a way that Google’s algorithms trust (e.g., clear answers, schema tags), Gemini’s selection of Sereda content suggests it found it relevant and trustworthy enough to show. Indeed, adding FAQ schema and structured data is known to increase the chances of content being used in AI answers.

- The fact that ChatGPT even gave Sereda as #1 indicates that within its training + retrieval, Sereda might have been presented in sources as a leading solution. Possibly the AI Page or other PR said “Sereda.ai is among the top solutions for XYZ” – the model then mirrors that language (we saw hints of “leading” or “standout”). This can be a double-edged sword (one must not inflate claims too much), but in this case it appears to have helped position Sereda advantageously.
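To make the FAQ schema point concrete, here is a minimal sketch of how FAQ structured data could be generated. We do not know what markup the actual AI Page used, so this is an assumption throughout: the helper name `faq_jsonld` and the sample question are hypothetical, and the answer text is paraphrased from the product description in the introduction. The `FAQPage`, `Question`, and `Answer` types are standard schema.org vocabulary.

```python
import json

def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

# Hypothetical FAQ entry; not copied from the real page.
faq = faq_jsonld([
    (
        "What is Sereda.ai?",
        "Sereda.ai is a SaaS platform for HR development that combines "
        "corporate knowledge management, internal learning (LMS), "
        "employee surveys, and performance reviews.",
    ),
])

# JSON-LD is normally embedded in the page inside a script tag of this type.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(faq, indent=2, ensure_ascii=False)
    + "\n</script>"
)
print(snippet)
```

Keeping the answer text identical to the visible on-page answer is the safer design, since the markup is meant to describe content a reader can actually see.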

4. Recency and Uniqueness: By late 2025, ChatGPT’s browsing and Google’s index would treat a fresh, content-rich page as a prime source. The AI Page provided unique content that didn’t exist before – so when asked about Sereda or related topics, it naturally became the go-to source because other sources were sparse or generic. For instance, SoftwareSuggest had a listing but with generic text; the AI Page likely offered far more substance. LLMs prefer to give answers that feel comprehensive – the AI Page equipped them to do so specifically about Sereda, whereas previously they’d avoid mention rather than give an incomplete blurb. Additionally, being on Sereda’s own subdomain, it carried official weight. We observed that ChatGPT with browsing often clicked the sereda.ai site or subdomain in search results when answering after launch, indicating it directly pulled from the page.

5. Cross-query impact: The improvements were not confined to queries explicitly naming Sereda. This is key: the AI Page helped in related queries (like those about engagement tools, LMS alternatives, etc.). This breadth suggests the page content was comprehensive – touching multiple angles (product definition, use cases, comparisons). In essence, one well-crafted page managed to inform a range of user questions, serving as a multi-purpose knowledge source for the AI. This is a hallmark of effective AEO/GEO content: cover as many relevant user intents as possible so the AI can draw on your content for various questions. The Chetver AI Page presumably anticipated what users (and by proxy, AI) might ask and provided the answers, which then got reflected in the AI’s output.

It’s also worth noting that our controlled tests confirmed the AI Page as the difference-maker. In the “after” period, when we blocked access to the AI Page, ChatGPT’s performance largely reverted: for example, asking “What is Sereda.ai?” without letting it open the page led to, at best, a superficial answer (“I found it’s an HCM platform” one-liner) or uncertainty. This demonstrates that the model had not somehow magically absorbed Sereda.ai into its frozen knowledge just by passage of time; it was actively referencing the page via retrieval. Similarly, if we imagine Google’s Gemini with the Sereda page de-indexed, it would have far less to say. Thus, continued availability and accessibility of that content are crucial for maintaining these results. (This raises a practical point: never put such an AI-facing page behind login or block it via robots.txt, as doing so would remove it from the LLMs’ reach.)
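The robots.txt point can be verified before launch with Python’s standard library. The sketch below is illustrative only: the robots.txt content and the URL `https://ai.example.com/` are hypothetical (the real subdomain is not named here), and the crawler list is an assumption about which user agents matter for ChatGPT, Google, and Bing.

```python
from urllib.robotparser import RobotFileParser

def blocked_agents(robots_txt, url, agents):
    """Return the user agents that the given robots.txt forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]

# Hypothetical robots.txt that accidentally shuts out one AI crawler.
ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

# Assumed set of crawlers to check; adjust to the assistants you care about.
AGENTS = ["GPTBot", "OAI-SearchBot", "Googlebot", "bingbot"]

print(blocked_agents(ROBOTS, "https://ai.example.com/", AGENTS))  # ['GPTBot']
```

An empty list means none of the listed crawlers is excluded by robots.txt. It does not prove the page is indexed, which still has to be confirmed in the search engines themselves.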

In summary, the discussion highlights that the AI Page functioned as an “AI-specific SEO” asset, translating classic SEO techniques (structured content, schema, authoritative tone) into LLM visibility gains. Sereda.ai’s success here exemplifies how investing in content for AI discovery can dramatically pay off. It essentially gave Sereda free “word of mouth” via AI, which in the age of chatbot advisors, is incredibly valuable.

Limitations and Alternative Interpretations

While the findings are compelling, it’s important to acknowledge limitations and consider other factors:

- 1. Attribution of Improvements: We attribute the changes to the Chetver AI Page. However, could anything else have contributed between late Sept and late Oct 2025? It’s possible that LLM model updates or training data refreshes occurred in that time. For example, OpenAI might have expanded GPT-4’s knowledge cutoff or integrated some newer data by late 2025. If Sereda.ai’s information (from say early 2025 news or directories) had been included in a model update, that could improve performance independent of the AI Page. We think this is unlikely to fully explain it, as the specificity of the answers (pulling exact phrases from the page) points to real-time retrieval. ChatGPT’s answers after were too detailed and aligned with web content to have come solely from a parametric knowledge update. Gemini’s case is clearer since it’s retrieval-based (Google’s index). To control for this, our use of ChatGPT with browsing ensured it was actively searching. In every post-launch query, the chat logs showed it clicking the Sereda AI Page or related results – evidence that the page was directly used. If the model had magically known everything without searching, it wouldn’t need to click sources. Therefore, while we can’t 100% rule out background model improvements, the direct causality of the new content is strongly supported.

- 2. Time Lag and Indexing: We only looked 60 days out. SEO (and by extension AEO/GEO) often has effects that continue to evolve beyond such a window. It’s possible that some improvements were already visible by e.g. 1 week after launch (especially for ChatGPT when explicitly browsing), whereas others (like consistently high placement in Google’s AI answers) might have taken a month or more as the page gained “authority” and backlinks. Our analysis averaged the 60-day after period. If one were to zoom in, they might find that in the first few days after Oct 22, the impact on ChatGPT was smaller (if the page wasn’t immediately indexed or if the AI hadn’t seen it yet). Indeed, one source notes that “most new content pages on a subdomain take weeks or months before they do anything meaningful” in AI results. [13]

- 3. Query Selection: Our four queries, while well-chosen for relevance, don’t cover every possible way a user might discover Sereda. We didn’t test, for example, “top LMS for SMBs” or “HR software Ukraine” etc. It’s possible some queries still wouldn’t trigger Sereda.ai in answers, either due to competition or because the AI Page didn’t address that angle. Our results shouldn’t be over-generalized to mean “Sereda now shows up for anything HR-related.” Context matters – the AI Page optimized for certain keywords/intents.

- 4. Persistence of Effect: An AI Page’s impact might wane if not maintained. LLMs might favor fresher or more comprehensive sources over time. If Sereda.ai doesn’t update that page and competitors create their own AI-optimized content, Sereda’s prominence could diminish.

- 5. Model Differences and Evolution: We treated ChatGPT and Gemini similarly in analysis, but they operate differently. ChatGPT’s behavior with browsing enabled still has some nondeterminism – if it doesn’t find the page or if the user’s prompt context is different, it might give a different answer. Gemini’s answers can differ if Google’s featured snippets change or if it decides to cite a different site.

- 6. Scoring Subjectivity: Our 0–10 scoring on criteria, while helpful for quantification, is inherently subjective. We tried to be consistent (two raters, averaged), but another evaluator might give slightly different numbers.

- 7. Alternative Explanations: Could Sereda.ai have appeared more simply because the questions we asked have low competition or because we, as testers, might have biased the AI by asking about Sereda? We considered whether repeated questioning could teach ChatGPT (“model priming”). We avoided that by using fresh sessions and the control conditions.

- 8. Limitations of LLMs: Although the AI Page helped LLMs give better answers, users might still question the depth of those answers; complex comparison prompts may still go beyond what the page directly covers.
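On the scoring subjectivity noted in point 6, one way to make the two-rater averaging more transparent is to report the gap between raters alongside each mean. This is a minimal sketch under stated assumptions: the scores below are hypothetical and not data from this study, the criterion keys mirror the five criteria used in the evaluations, and the disagreement threshold of 2 points is an arbitrary choice.

```python
from statistics import mean

CRITERIA = ("presence", "accuracy", "key_features", "no_false_info", "position")

def combine(rater_a, rater_b):
    """Average two raters' 0-10 scores per criterion and record their gap."""
    combined = {}
    for criterion in CRITERIA:
        a, b = rater_a[criterion], rater_b[criterion]
        combined[criterion] = {"mean": mean((a, b)), "gap": abs(a - b)}
    return combined

# Hypothetical scores for a single answer; NOT results from this case study.
rater_a = {"presence": 10, "accuracy": 9, "key_features": 8, "no_false_info": 10, "position": 9}
rater_b = {"presence": 10, "accuracy": 8, "key_features": 9, "no_false_info": 10, "position": 7}

combined = combine(rater_a, rater_b)

# Flag criteria where the raters differ by 2 or more points (assumed threshold).
flagged = [c for c, s in combined.items() if s["gap"] >= 2]

print(combined["accuracy"]["mean"])  # 8.5
print(flagged)                       # ['position']
```

Flagged criteria are candidates for a third rating or a short discussion between raters before the averaged number is reported.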

Conclusion

The deployment of the Chetver AI Page for Sereda.ai on 22 October 2025 provides a compelling case study in the power of AI-focused content optimization. In the 60 days following its publication, Sereda.ai transformed from a virtually unknown entity in the eyes of ChatGPT and Google’s Gemini, to a frequently recommended, well-described solution for relevant user inquiries. The quantitative and qualitative analyses demonstrate clear improvements in: Sereda.ai’s presence in AI-generated recommendations, the accuracy and richness of those recommendations, the inclusion of key product features, the elimination of informational gaps or errors, and the prominence of Sereda.ai as a top suggestion rather than an afterthought.

In practical terms, if a potential customer in late 2025 asks an AI assistant for “the best employee engagement platforms” or “AI tools for performance reviews,” they are now very likely to hear about Sereda.ai, accompanied by an accurate mini-pitch of its capabilities, whereas previously they would not have encountered Sereda at all. This kind of visibility boost is invaluable for an emerging B2B product, as it injects the brand into conversations that thousands of users are having with AI assistants daily. As one marketing expert aptly put it, “if your app isn’t included in ChatGPT’s answer, you don’t exist in this new discovery ecosystem.” By that standard, Sereda.ai, through strategic content optimization, moved from nonexistence to front-and-center existence in the AI search ecosystem.

Crucially, this improvement was achieved without any manipulation or loss of factual integrity. The AI Page strategy did not “trick” the AI with keywords – it educated the AI with substance. The resulting recommendations are genuinely useful to users (arguably more useful than before, since now users get a broader view including an innovative option). This aligns with the idea that optimizing for LLMs is about aligning with user intent and providing value, not just gaming algorithms. Sereda.ai’s case shows that when a company takes control of its narrative in AI channels – by publishing clear, comprehensive information targeted at LLM consumption – the payoff is more accurate and favorable treatment by those very channels.

From a technical perspective, the success of the AI Page underscores several emerging best practices in AEO/GEO (Ask Engine Optimization / Generative Engine Optimization):

- Use of Structured, AI-readable Content: Writing content that directly answers likely user questions, using simple language and structured sections, helps LLMs ingest and reuse that information. Sereda’s page apparently did this, as evidenced by LLMs directly lifting phrases and facts. [25]

- Covering Multiple Semantically Related Topics: Sereda’s single page managed to link the brand to several important topic areas (engagement, LMS alternative, performance review, etc.). This broad coverage on one authoritative page gave LLMs a one-stop reference to pull answers for various queries – showing the efficiency of a well-scoped “AI hub” page.

- Incorporating Credibility Indicators: Including awards, use cases, or stats can cause LLMs to mention them, further enhancing the recommendation (ChatGPT noting Sereda’s award made the recommendation more persuasive). Brand authority matters in AI results, and while Sereda is a startup, the page smartly showcased whatever authority it had. [26]

- Ensuring Crawl Accessibility: Because the page was on a subdomain specifically for AI (and presumably allowed in robots.txt), it was easily crawlable. We confirmed that both Bing (for ChatGPT) and Google indexed it. This seems trivial, but some companies mistakenly hide content or split it in ways that AIs can’t access. Sereda/Chetver avoided that pitfall. [22]

For Sereda.ai, the outcome of this experiment is a strong validation that investing in AI-specific content yields a tangible return in how advanced search AI perceives and presents the brand. It effectively amplified Sereda’s reach in an organic way. Considering that more users (especially HR professionals and tech-savvy decision-makers) are using AI assistants to inform their software choices, being on the AI’s “recommendation shortlist” can directly translate into business opportunities. In the months following, one would expect higher inbound traffic from AI referrals – something that other case studies have reported (e.g., a 20× increase in AI-driven visits after similar efforts).

In conclusion, the Chetver AI Page significantly improved LLMs’ understanding, accuracy, and recommendation behavior regarding Sereda.ai, effectively turning the models into knowledgeable advocates for the product. This study demonstrates how a relatively small content change can have outsized influence in the AI-driven discovery landscape. As AI continues to shape how information is found and filtered, proactive approaches like this will become a staple in digital strategy. Sereda.ai’s success story can serve as a blueprint for other organizations: to be visible in the age of ChatGPT and Gemini, you must speak the AI’s language – clearly, factually, and on the channels the AI indexes. Sereda did exactly that, and the results speak for themselves, with Sereda.ai now firmly on the radar of both the machines and the humans asking them for advice.

Sources

- Can someone explain GEO and AEO in a simple way? Trying to understand these newer terms clearly. - Reddit, accessed December 23, 2025, https://www.reddit.com/r/DigitalMarketing/comments/1pd7lti/can_someone_explain_geo_and_aeo_in_a_simple_way/

- What is Generative Engine Optimization? GEO vs AEO vs SEO Guide 2025 - Jasper.ai, accessed December 23, 2025, https://www.jasper.ai/blog/geo-aeo

- The Definitive Guide to Generative Engine Optimization (AIEO, AISEO, GEO) - tekRESCUE, accessed December 23, 2025, https://mytekrescue.com/generative-engine-optimization-aiseo-aieo/

- Generative Engine Optimization (GEO) & AI Optimization (AIO) vs SEO, Explained CLEARLY | by Ann Smarty | Medium, accessed December 23, 2025, https://medium.com/@seosmarty/generative-engine-optimization-geo-ai-optimization-aio-vs-seo-explained-clearly-2aa93425d89a

- AEO vs AIO vs GEO – What's The Difference? - HubSpot Community, accessed December 23, 2025, https://community.hubspot.com/t5/Blog-Website-Page-Publishing/AEO-vs-AIO-vs-GEO-What-s-The-Difference/m-p/1218529

- GEO: The Complete Guide to AI-First Content Optimization 2025 - ToTheWeb, accessed December 23, 2025, https://totheweb.com/blog/beyond-seo-your-geo-checklist-mastering-content-creation-for-ai-search-engines/

- How to optimize your website for AI-powered search - MarTech, accessed December 23, 2025, https://martech.org/how-to-optimize-your-website-for-ai-powered-search/

- Real-World GEO Case Studies: How Brands Win AI Search, accessed December 23, 2025, https://alphap.tech/generative-engine-optimisation-geo-real-world-examples/

- What is AI Optimization (AIO) and Why Is It Important? - Conductor, accessed December 23, 2025, https://www.conductor.com/academy/ai-optimization/

- Build a RAG System in 5 Minutes with Google Gemini File Search - EffiFlow, accessed December 23, 2025, https://jangwook.net/en/blog/en/google-gemini-file-search-rag-tutorial/

- How to Power-Up LLMs with Web Scraping and RAG - Scrapfly, accessed December 23, 2025, https://scrapfly.io/blog/posts/how-to-use-web-scaping-for-rag-applications

- Retrieval Augmented Generation is an Anti-pattern - Elumenotion, accessed December 23, 2025, https://www.elumenotion.com/Journal/RagIsAnAntipattern.html

- What Is llms.txt? How the New AI Standard Works (2025 Guide) - Bluehost, accessed December 23, 2025, https://www.bluehost.com/blog/what-is-llms-txt/

- Debunking LLMs.txt Myths: What You Need to Know for AI Visibility - Wix.com, accessed December 23, 2025, https://www.wix.com/studio/ai-search-lab/llms-txt-myths

- llms.txt: Why Marketing Sites Can't Ignore the New SEO for AI | Ingeniux, accessed December 23, 2025, https://www.ingeniux.com/blog/llmstxt-why-marketing-sites-cant-ignore-the-new-seo-for-ai

- What is llms.txt? Why it's important and how to create it for your docs – GitBook Blog, accessed December 23, 2025, https://www.gitbook.com/blog/what-is-llms-txt

- Why Brands Must Have A Knowledge Graph to Master AI Visibility in 2026 - Yext, accessed December 23, 2025, https://www.yext.com/blog/2025/12/knowledge-graph-for-ai-visibility-2026

- Outsmart AI Overviews On SERPs: How GEO Brings Back Your Customers - Brighttail, accessed December 23, 2025, https://www.brighttail.com/blog/ai-overviews-optimization/

- What Is LLMs.txt? & Do You Need One? - Neil Patel, accessed December 23, 2025, https://neilpatel.com/blog/llms-txt-files-for-seo/

- Memo: The New Demand Layer of The Internet - 2PM, accessed December 23, 2025, https://2pml.com/2025/12/04/agentic-aeo/

- The Agentic Web Explained: How AI Agents Will Change Business Websites, accessed December 23, 2025, https://www.topdevelopers.co/blog/agentic-web/

- llms-txt: The /llms.txt file, accessed December 23, 2025, https://llmstxt.org/

- 5 LLMs.txt use cases for marketers - Wix.com, accessed December 23, 2025, https://www.wix.com/studio/ai-search-lab/llms-txt-use-cases

- What is llms.txt and why it matters for SEO in the age of AI (ChatGPT, Perplexity..) - Inblog, accessed December 23, 2025, https://inblog.ai/blog/llms-txt-seo-impact-ai-search

- Building Citation-Worthy Content: Making Your Brand a Data Source for LLMs - Averi AI, accessed December 23, 2025, https://www.averi.ai/blog/building-citation-worthy-content-making-your-brand-a-data-source-for-llms

- LLM-Friendly Content: 12 Tips to Get Cited in AI Answers - Onely, accessed December 23, 2025, https://www.onely.com/blog/llm-friendly-content/

- Image SEO for multimodal AI - Search Engine Land, accessed December 23, 2025, https://searchengineland.com/image-seo-multimodal-ai-466508

- How to Build AI Agent Friendly Websites? - Apexure, accessed December 23, 2025, https://www.apexure.com/blog/ai-agent-friendly-websites

- The Limits of Schema Markup for AI Search: LLM Citation Analysis, accessed December 23, 2025, https://searchatlas.com/blog/limits-of-schema-markup-for-ai-search/

- How Can Schema Markup Specifically Enhance LLM Visibility - Walker Sands, accessed December 23, 2025, https://www.walkersands.com/about/blog/how-can-schema-markup-support-llm-visibility/

- Optimizing Content for LLMs: Technical & Structural Strategies for Enhanced Discoverability and Citation - LeadSpot, accessed December 23, 2025, https://lead-spot.net/optimizing-content-for-llms-technical-structural-strategies-for-enhanced-discoverability-and-citation/

- Measuring the ROI of GEO: How to Estimate Traffic and Brand Lift from AI Citations, accessed December 23, 2025, https://www.hashmeta.ai/blog/measuring-the-roi-of-geo-how-to-estimate-traffic-and-brand-lift-from-ai-citations

- How to Earn LLM Citations: A Practical Guide for AI-Search Visibility - SeoProfy, accessed December 23, 2025, https://seoprofy.com/blog/llm-citations/

- Want LLMs to Recommend Your Brand? Here's What We've Found Works: GPT Articles, accessed December 23, 2025, https://www.mintcopywritingstudios.com/blog/gpt-articles

- How Do AI Agents Do Human Work? Comparing AI and Human Workflows Across Diverse Occupations - arXiv, accessed December 23, 2025, https://arxiv.org/html/2510.22780v2

Interested in the broader GEO and Share of Model framework behind this Sereda.ai case study? Read the analytical deep dive on Share of Model and GEO strategy.

If you want a practical, concept-level overview of GEO, AIEO, and AI Pages beyond this one experiment, explore the guide AI GEO, AIEO, and AI Page.

For a technical view on how RAG systems, chunking, and schema support the kind of AI visibility shown here, continue with the implementation-focused report GEO, RAG, and structured data for AI search.